In real projects, success depends on what users actually do, not on what teams assume. When attention spans are short, testing Webflow design decisions against actual behavior gives reliable answers.

A widely cited web analytics finding reports that about 55 percent of visitors spend fewer than 15 seconds actively on a page, which leaves only a narrow window to persuade.

This guide turns user testing and A/B testing in Webflow into a practical, step-by-step process for real pages and components.

Website User Testing: Core Methods

Strong testing combines realistic tasks, representative devices, and a clean plan for analysis. In early cycles, fast, lightweight methods surface obvious issues and inform later deep dives.

Across later cycles, moderated sessions and structured observation capture nuance that numbers alone miss. After each pass, apply fixes, rerun targeted checks, and move forward with clearer signals.

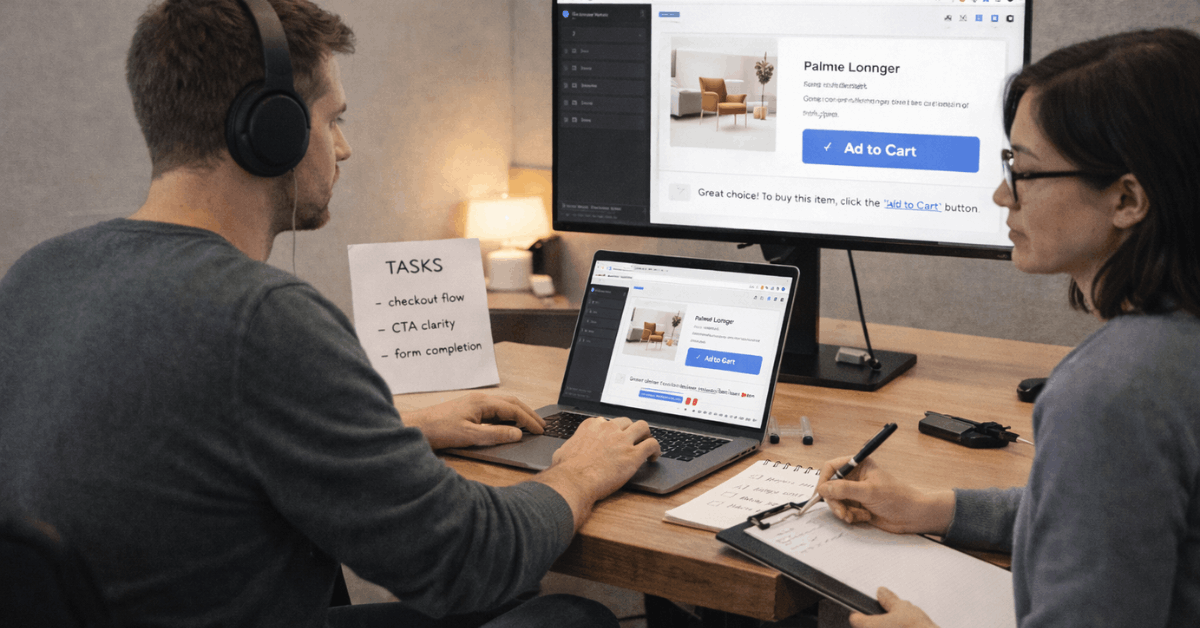

On-Site Testing

In-person sessions inside a lab or controlled room let moderators standardize instructions and observe details such as hesitation and body language. This approach suits high-stakes flows such as checkouts or onboarding, where small barriers compound.

Costs run higher due to space, staff, and setup, although data quality often justifies the expense. Recruitment must exclude insiders to avoid familiarity bias that masks genuine friction.

Unmoderated Testing

Remote, task-based assessments happen on participants’ devices without a live moderator. Natural environments reveal authentic interruptions, device quirks, and everyday browsing habits that shape outcomes.

Costs drop substantially, which allows more frequent rounds across sprints. Tradeoffs include thinner qualitative context and occasional ambiguity about why a behavior occurred.

Moderated Testing

Live moderators guide participants through tasks in person or over video while avoiding leading prompts. This blends realism and qualitative depth, since participants can voice thoughts aloud as they work.

Skilled facilitation prevents bias while screen capture and notes preserve evidence for later review. Teams often pair this method with light analytics for triangulation.

Guerrilla Testing

Rapid intercepts in public spaces provide quick reads on clarity, labels, and first-impression affordances.

Results arrive fast because recruitment happens on the spot without pre-screening. Sample quality varies, so treat this as a rough filter rather than a final verdict. Use insights to refine copy, icons, and hierarchy, then validate with more targeted cohorts.

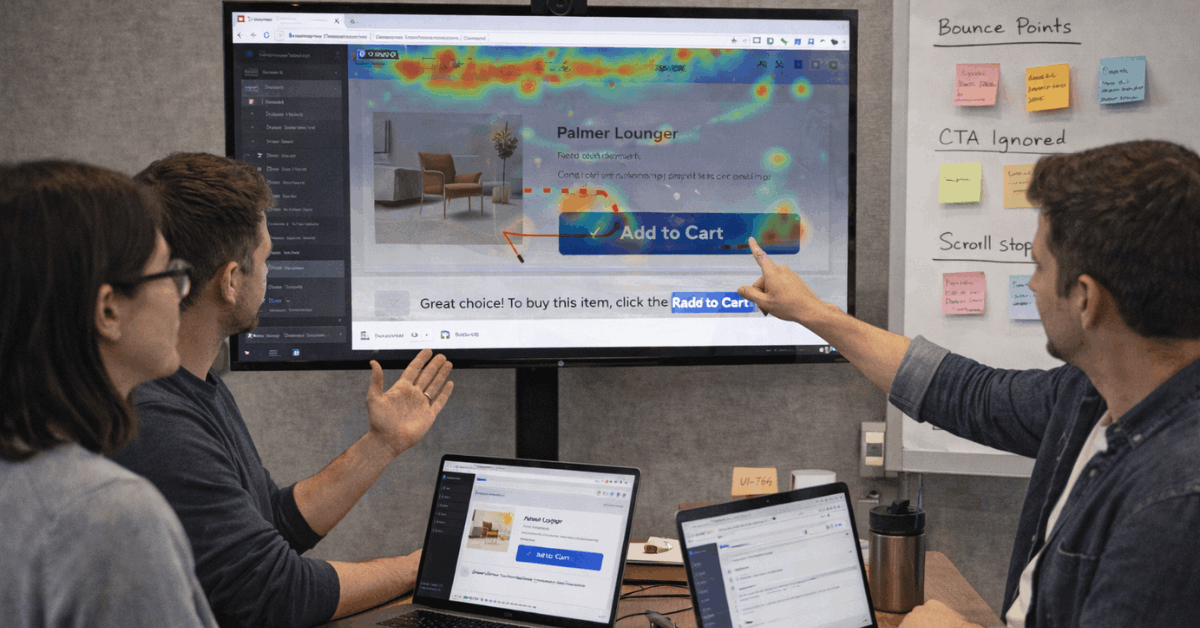

Screen Recording

Session videos reveal scroll patterns, cursor travel, rage clicks, and abandonment points across devices. Asynchronous review lets teams pause, replay, and tag moments for discussion during standups.
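
Rage clicks are generally understood as several rapid clicks in roughly the same spot. The sketch below shows one way to flag them from exported click data. The `ClickEvent` shape and the default thresholds (3 clicks, 1 second, 30 pixels) are assumptions for illustration, not any recording tool's definition.

```typescript
// Hypothetical click record; real recording tools export their own formats.
interface ClickEvent {
  timestampMs: number;
  x: number;
  y: number;
}

// Flags runs of consecutive clicks that stay within `radiusPx` and `windowMs`
// of the run's first click. Thresholds are illustrative, not a standard.
function findRageClicks(
  clicks: ClickEvent[],
  minClicks = 3,
  windowMs = 1000,
  radiusPx = 30,
): ClickEvent[][] {
  const sorted = [...clicks].sort((a, b) => a.timestampMs - b.timestampMs);
  const bursts: ClickEvent[][] = [];
  let i = 0;
  while (i < sorted.length) {
    const anchor = sorted[i];
    const burst: ClickEvent[] = [anchor];
    let j = i + 1;
    // Extend the run while clicks stay close in both time and position.
    while (
      j < sorted.length &&
      sorted[j].timestampMs - anchor.timestampMs <= windowMs &&
      Math.hypot(sorted[j].x - anchor.x, sorted[j].y - anchor.y) <= radiusPx
    ) {
      burst.push(sorted[j]);
      j++;
    }
    if (burst.length >= minClicks) {
      bursts.push(burst);
      i = j; // skip past the burst so its clicks are not counted twice
    } else {
      i++;
    }
  }
  return bursts;
}
```

Tagged bursts can then be matched to the video timestamp so reviewers jump straight to the frustrating moment.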

Tools that support consent, masking, and retention controls help keep participant data private. Pair recordings with lightweight surveys to tie observed behavior to stated intent.
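
Masking means sensitive text is replaced before a recording is stored. As a rough illustration of the idea, the sketch below masks email addresses and runs of four or more digits in captured text. The patterns are assumptions and far from complete PII detection; in practice, rely on the masking settings of the recording tool itself.

```typescript
// Illustrative patterns only; real PII detection needs far broader coverage.
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
const DIGIT_RUN_PATTERN = /\d{4,}/g; // phone, card, and account-like numbers

// Masks captured text before it is persisted alongside a session recording.
function maskCapturedText(text: string): string {
  return text
    .replace(EMAIL_PATTERN, "[email]")
    .replace(DIGIT_RUN_PATTERN, (run) => run.replace(/\d/g, "*"));
}
```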

POEMS Framework: Capturing Context

During observation, a POEMS checklist keeps attention on the broader system around the test:

- People: demographics, roles, and behaviors that influence task framing and expectations.

- Objects: tools, furniture, devices, and peripherals that shape posture, speed, and input accuracy.

- Environments: lighting, noise, and temperature, which subtly affect satisfaction and patience.

- Messages: wording, tone, and jargon that might confuse or mislead.

- Services: supporting systems or policies that add steps or introduce delays.
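
To make the checklist enforceable, observers can log notes against the five categories and check coverage before a session ends. The record shape and function below are a hypothetical sketch, not part of any published POEMS schema.

```typescript
// Hypothetical observation record keyed to the five POEMS categories.
type PoemsCategory = "people" | "objects" | "environments" | "messages" | "services";

interface PoemsNote {
  category: PoemsCategory;
  note: string;
  sessionId: string;
}

const POEMS_CATEGORIES: PoemsCategory[] = ["people", "objects", "environments", "messages", "services"];

// Lists the categories that have no notes yet for one session.
function missingCategories(notes: PoemsNote[], sessionId: string): PoemsCategory[] {
  const covered = new Set(notes.filter((n) => n.sessionId === sessionId).map((n) => n.category));
  return POEMS_CATEGORIES.filter((c) => !covered.has(c));
}
```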

Essential Webflow Usability Metrics

Clear metrics focus teams, prevent aimless debates, and simplify reporting. In product sprints, pick a small set that reflects goals, device mix, and traffic sources.

After each release, compare deltas rather than absolute values to see real movement. Because no single number tells the whole story, combine quantitative and qualitative views.
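
Comparing deltas is simple arithmetic, but computing both the absolute and the relative change keeps reports consistent. The metric names below are hypothetical; any numeric snapshot per release works.

```typescript
// One snapshot of metric values per release; metric names are hypothetical.
type MetricSnapshot = Record<string, number>;

interface MetricDelta {
  metric: string;
  absolute: number;
  relative: number | null; // null when the baseline is zero
}

// Reports absolute and relative change for metrics present in both snapshots.
function compareReleases(before: MetricSnapshot, after: MetricSnapshot): MetricDelta[] {
  return Object.keys(before)
    .filter((metric) => metric in after)
    .map((metric) => {
      const absolute = after[metric] - before[metric];
      const relative = before[metric] === 0 ? null : absolute / before[metric];
      return { metric, absolute, relative };
    });
}
```

For example, a median time on task falling from 80 to 68 seconds is an absolute delta of -12 seconds, or a 15 percent improvement.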

Accessibility

Measure against the Web Content Accessibility Guidelines (WCAG) Level AA as a baseline, meet Level AAA criteria where feasible, and document any justified exceptions. Screen reader flows, focus states, contrast ratios, and keyboard traps require regular checks.

Alt text quality and heading structure influence both comprehension and navigation speed. Treat accessibility as ongoing practice rather than a one-time audit.
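
Contrast checks follow a fixed formula in WCAG 2.x: each sRGB channel is linearized, combined into a relative luminance, and the ratio is (L_lighter + 0.05) / (L_darker + 0.05). Level AA requires at least 4.5:1 for normal text and Level AAA at least 7:1. The sketch below assumes colors arrive as 6-digit hex strings, as they would from a style guide or Webflow's style panel.

```typescript
// Linearizes one 8-bit sRGB channel as defined for WCAG 2.x relative luminance.
function channelLuminance(value8bit: number): number {
  const c = value8bit / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of a 6-digit hex color such as "#1a2b3c".
function relativeLuminance(hex: string): number {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex);
  if (!match) throw new Error(`Expected a 6-digit hex color, got ${hex}`);
  const n = parseInt(match[1], 16);
  const r = (n >> 16) & 0xff;
  const g = (n >> 8) & 0xff;
  const b = n & 0xff;
  return 0.2126 * channelLuminance(r) + 0.7152 * channelLuminance(g) + 0.0722 * channelLuminance(b);
}

// Contrast ratio between two colors, from 1 (identical) to 21 (black on white).
function contrastRatio(foreground: string, background: string): number {
  const a = relativeLuminance(foreground);
  const b = relativeLuminance(background);
  const lighter = Math.max(a, b);
  const darker = Math.min(a, b);
  return (lighter + 0.05) / (darker + 0.05);
}
```

For instance, gray `#767676` on white just clears 4.5:1, while the slightly lighter `#777777` falls short of AA for normal text.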

Efficiency

Track time on task, scroll depth, and path length to a clear conversion or micro-conversion.

Fewer steps and clearer labels reduce effort and lift completion rates. Funnel analysis highlights detours, dead ends, and redundant confirmations that slow progress. Small layout adjustments near CTAs can reduce hesitation and increase decisive clicks.
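
Funnel analysis reduces to step-to-step conversion: the share of sessions at one step that reach the next. The sketch below assumes session counts per step are already exported from analytics; the step names are hypothetical.

```typescript
// Ordered funnel steps with the number of sessions that reached each one.
interface FunnelStep {
  name: string;
  sessions: number;
}

interface StepConversion {
  from: string;
  to: string;
  conversion: number; // share of sessions at `from` that reached `to`
  dropOff: number;
}

function analyzeFunnel(steps: FunnelStep[]): StepConversion[] {
  const result: StepConversion[] = [];
  for (let i = 1; i < steps.length; i++) {
    const prev = steps[i - 1];
    const curr = steps[i];
    const conversion = prev.sessions === 0 ? 0 : curr.sessions / prev.sessions;
    result.push({ from: prev.name, to: curr.name, conversion, dropOff: 1 - conversion });
  }
  return result;
}

// The transition with the largest drop-off is a natural first place to look for friction.
function worstStep(steps: FunnelStep[]): StepConversion | undefined {
  return analyzeFunnel(steps).reduce<StepConversion | undefined>(
    (worst, s) => (worst === undefined || s.dropOff > worst.dropOff ? s : worst),
    undefined,
  );
}
```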

Errors

Catalog both system errors and user-initiated mistakes such as misclicks or wrong-path selections. Broken links, vague empty states, and ambiguous button labels inflate error counts.

Clear copy, inline validation, and resilient fallbacks stabilize outcomes under real conditions. Trend errors by device and browser to prioritize fixes that move the largest segments.
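
Prioritizing by segment can start with a simple grouping of error events by device and browser, sorted by volume. The `LoggedError` shape is a hypothetical export format; real fields depend on the logging or analytics tool.

```typescript
// Hypothetical error log entry covering both system and user-initiated errors.
interface LoggedError {
  kind: "system" | "user";
  device: string;
  browser: string;
}

interface SegmentErrors {
  segment: string;
  count: number;
}

// Counts errors per device and browser pair, largest segment first.
function errorsBySegment(errors: LoggedError[]): SegmentErrors[] {
  const counts = new Map<string, number>();
  for (const e of errors) {
    const key = `${e.device} / ${e.browser}`;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return Array.from(counts.entries())
    .map(([segment, count]) => ({ segment, count }))
    .sort((a, b) => b.count - a.count);
}
```

Running this per week or per release turns the counts into a trend line.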

Satisfaction

Use quick, targeted measures such as post-task ratings and brief comment prompts. Correlate satisfaction shifts with specific changes in copy, spacing, or interaction cost.

Sustained improvements typically follow clarity upgrades rather than decorative flourishes. When comments cite trust, speed, or control, conversion metrics usually move in the same direction.

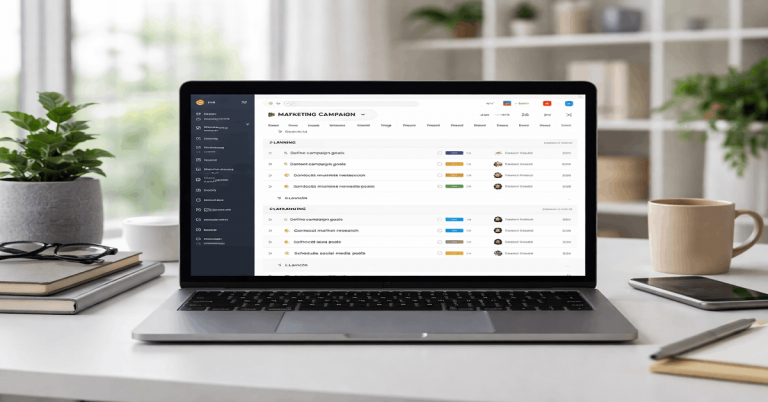

Planning And Running Tests That Matter

In early discovery, define outcomes in plain language so every stakeholder shares the same target. Next, select methods that match constraints for time, budget, and access to participants.

Recruitment focuses on actual audience segments, not convenience samples that skew results. After execution, synthesize evidence into a single, prioritized backlog so fixes ship without delay.

A/B Testing In Webflow: Types And Benefits

In optimization cycles, controlled experiments turn opinions into measurable outcomes. A classic A/B test pits two variants of a single element or page against each other. Split URL tests compare different page structures hosted at separate URLs, which suits layout overhauls.

Multivariate tests in Webflow evaluate combinations of elements to reveal interaction effects across headlines, images, and CTAs. Multi-page funnel tests apply coordinated changes across steps to measure journey-level lift. Because outcomes are numeric, A/B testing grounds design choices in measured evidence rather than intuition.
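
A full-factorial multivariate test turns every combination of element options into its own variant, which is why such tests need far more traffic than a simple A/B test. The sketch below enumerates those combinations; the element names and options are hypothetical.

```typescript
// Enumerates every combination of element options (full-factorial design).
function fullFactorial(factors: Record<string, string[]>): Record<string, string>[] {
  let combos: Record<string, string>[] = [{}];
  for (const element of Object.keys(factors)) {
    const next: Record<string, string>[] = [];
    for (const combo of combos) {
      for (const option of factors[element]) {
        next.push({ ...combo, [element]: option });
      }
    }
    combos = next;
  }
  return combos;
}

const mvtVariants = fullFactorial({
  headline: ["A", "B"],
  image: ["photo", "illustration"],
  cta: ["Start free", "Book a demo", "See pricing"],
});
```

Two headlines, two images, and three CTA labels already yield 12 variants, so each receives roughly one twelfth of the traffic.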

Conversion rate, click-through, and task completion become the deciding evidence, not preference. Risk also drops, since small, isolated changes reveal impact before full rollouts. Over time, a cadence of experiments compounds into durable gains across acquisition and retention.
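
To show what "confidence" means for conversion rates, the sketch below applies a standard two-proportion z-test and reports relative uplift. It assumes fixed-horizon testing with a two-sided 5 percent significance level (|z| of at least 1.96); commercial tools may use Bayesian or sequential methods instead, so their reported numbers can differ.

```typescript
interface VariantResult {
  visitors: number;
  conversions: number;
}

// Two-proportion z-test using the pooled conversion rate for the standard error.
function twoProportionZTest(a: VariantResult, b: VariantResult) {
  const pA = a.conversions / a.visitors;
  const pB = b.conversions / b.visitors;
  const pooled = (a.conversions + b.conversions) / (a.visitors + b.visitors);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / a.visitors + 1 / b.visitors));
  const z = (pB - pA) / se;
  const relativeUplift = (pB - pA) / pA;
  return { pA, pB, z, relativeUplift, significantAt95: Math.abs(z) >= 1.96 };
}
```

With 5,000 visitors per variant, moving from 200 to 250 conversions (4 to 5 percent) clears the threshold, while 40 versus 45 conversions out of 1,000 each does not.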

Webflow Testing Best Practices

Strong practice prevents false positives and wasted cycles. The points below keep experiments clean, interpretable, and aligned to business impact.

- Set A Single Goal Per Test: In each run, choose one outcome such as click-through on a specific CTA or form completion rate. Narrow focus prevents diluted interpretation and muddled next steps.

- Test One Element At A Time: Isolate a headline, image, layout block, or button label so attribution stays clear. Stacked changes blur causality and inflate uncertainty.

- Run Long Enough For Significance: Volume, seasonality, and traffic mix influence how long a test should run. Premature stops often select noise rather than a real winner.

- Segment Where It Truly Matters: Device, geography, or traffic source segments reveal differences hidden in aggregate. Segment sparingly to avoid slicing data into unreliable fragments.

- Ship, Log, And Iterate: Once a winner emerges, ship it, record the decision, and queue the next hypothesis. Momentum builds when experiments roll continuously rather than sporadically.
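
How long is "long enough" can be estimated before launch from the baseline conversion rate and the smallest lift worth detecting. The sketch below uses the common normal-approximation formula for two proportions, assuming a two-sided 5 percent significance level (z = 1.96) and 80 percent power (z of about 0.8416); other settings change the result.

```typescript
// Approximate visitors needed per variant to detect a given relative lift.
function sampleSizePerVariant(
  baselineRate: number,
  minRelativeLift: number,
  zAlpha = 1.96, // two-sided 5% significance
  zBeta = 0.8416, // 80% power
): number {
  const p1 = baselineRate;
  const p2 = baselineRate * (1 + minRelativeLift);
  const variance = p1 * (1 - p1) + p2 * (1 - p2);
  const delta = p2 - p1;
  return Math.ceil((Math.pow(zAlpha + zBeta, 2) * variance) / (delta * delta));
}
```

A 4 percent baseline and a 25 percent minimum lift need roughly 6,700 visitors per variant; at 1,000 eligible visitors per day split evenly, that is about two weeks, which also conveniently covers full weekly cycles. Halving the detectable lift roughly quadruples the requirement.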

Tools That Fit Real Webflow Workflows

Tool selection depends on stack, team skills, and data needs. In Webflow projects, native or near-native tools lower setup cost and reduce brittleness.

For broader stacks, enterprise platforms layer on advanced targeting, personalization, and governance. Cost must follow value, so start simple and expand as repeatable gains appear.

Optibase

Optibase for Webflow integrates with the Webflow Designer and lets teams define variants, target audiences, and conversions. The free tier covers basic needs, while paid tiers raise monthly visitor limits and add features. Simple setup encourages frequent testing of the components that matter most to revenue.

Webflow Optimize

Webflow Optimize brings built-in experimentation and personalization into the platform. Native integration reduces code overhead and smooths handoff between design and marketing. For teams already anchored in Webflow, centralizing tests streamlines cadence and governance.

Hotjar

Hotjar pairs heatmaps, feedback widgets, and session recordings to explain why variants win or lose. Although it is not a full A/B suite, it validates hypotheses with qualitative context, and its recordings of Webflow pages help bridge the gap between numbers and lived behavior.

Crazy Egg

Crazy Egg offers click maps and lightweight experimentation suitable for early-stage programs. Visual overlays make quick wins visible for nontechnical stakeholders. Pair it with structured hypotheses and clean KPIs to avoid vanity readings.

Google Analytics 4

GA4 tracks events, conversions, and funnels that underpin experiment analysis. GA4 does not run experiments on its own, so a separate testing tool must assign variants, after which GA4 confirms impact on downstream behavior. Careful event naming and consistent parameters keep reports reliable.
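
Naming discipline is easier to keep when proposed event names are checked before they ship. Per Google's published GA4 rules at the time of writing (verify against current documentation), event names must start with a letter, use only letters, numbers, and underscores, stay within 40 characters, and avoid reserved prefixes such as `ga_`, `google_`, and `firebase_`. The lowercase check is an assumed team convention, not a GA4 rule.

```typescript
const RESERVED_PREFIXES = ["firebase_", "google_", "ga_"];

// Returns a list of problems with a proposed GA4 event name (empty means OK).
function eventNameProblems(name: string): string[] {
  const problems: string[] = [];
  if (name.length > 40) problems.push("longer than 40 characters");
  if (!/^[A-Za-z]/.test(name)) problems.push("must start with a letter");
  if (!/^[A-Za-z0-9_]*$/.test(name)) problems.push("only letters, digits, and underscores allowed");
  if (RESERVED_PREFIXES.some((p) => name.toLowerCase().startsWith(p))) problems.push("uses a reserved prefix");
  // Team convention (assumption): GA4 names are case-sensitive, so lowercase
  // snake_case avoids near-duplicates like "CTA_click" and "cta_click".
  if (name !== name.toLowerCase()) problems.push("team convention: use lowercase snake_case");
  return problems;
}
```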

VWO

VWO adds multivariate, split URL, and advanced targeting alongside heatmaps and recordings. Strong reporting and guardrails suit teams graduating beyond basic tests. Implementation requires discipline, although returns grow with scale.

AB Tasty

AB Tasty targets enterprise needs across experimentation and personalization. Rich audience rules and journey testing support complex commerce and content sites. Governance features help align marketing, product, and legal requirements.

Adobe Target

Adobe Target fits organizations deep in the Adobe stack. Automated personalization and robust segments power sophisticated campaigns. Integration with analytics platforms supports unified reporting across channels.

Step-By-Step: Running An A/B Test In Optibase

Clean execution starts in the Designer, continues in the test console, and ends in analysis. In this condensed walkthrough, the goal is to improve a single CTA, measured by verified clicks.

Keep scope tight, keep tracking explicit, and verify that both variants render correctly on target devices. After publishing, monitor early stability, then let the test run to significance.

- Install And Connect: Create an Optibase account, install the app in Webflow Designer, and paste the API key. Publish after adding the provided script to the project’s custom code.

- Define The Conversion: Select the primary CTA element and set the conversion to Click. Confirm the selector and fire a quick preview to validate event capture across devices.

- Create Variants: Duplicate the CTA element and edit the second label, then assign A and B inside Optibase. Set traffic split to fifty-fifty unless a weighted design is required.

- Target And Launch: Choose audience rules such as device class or geolocation if needed. Launch the test, verify both variants render as intended, and start your run timer.

- Analyze And Decide: After sufficient traffic, check uplift, confidence, and secondary signals like scroll depth. Ship the winner, archive the test, and log the decision for future reference.
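
A fifty-fifty split only stays clean if each visitor keeps seeing the same variant. The sketch below shows a generic way to do that, hashing a test ID together with a visitor ID into a stable bucket. It is not Optibase's implementation; the function names, the FNV-1a hash choice, and the ID format are assumptions for illustration.

```typescript
// 32-bit FNV-1a hash of a string, returned as an unsigned integer.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// Deterministically assigns a visitor to A or B; weightA = 0.5 gives a fifty-fifty split.
function assignVariant(visitorId: string, testId: string, weightA = 0.5): "A" | "B" {
  const bucket = fnv1a(`${testId}:${visitorId}`) / 0x100000000; // in [0, 1)
  return bucket < weightA ? "A" : "B";
}
```

Including the test ID in the hash keeps assignments independent across tests, so a visitor who lands in A for one experiment is not automatically in A for the next.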

Last Thoughts

In production sites, experiments are a system, not a stunt. Clear hypotheses, disciplined scope, and reliable measurement produce repeatable gains.

When stack fit favors native options, Webflow Optimize and Optibase reduce friction and speed learning. When scale demands more breadth, platforms like VWO, AB Tasty, and Adobe Target expand reach.